Research on speech recognition model for railway passenger service application

-

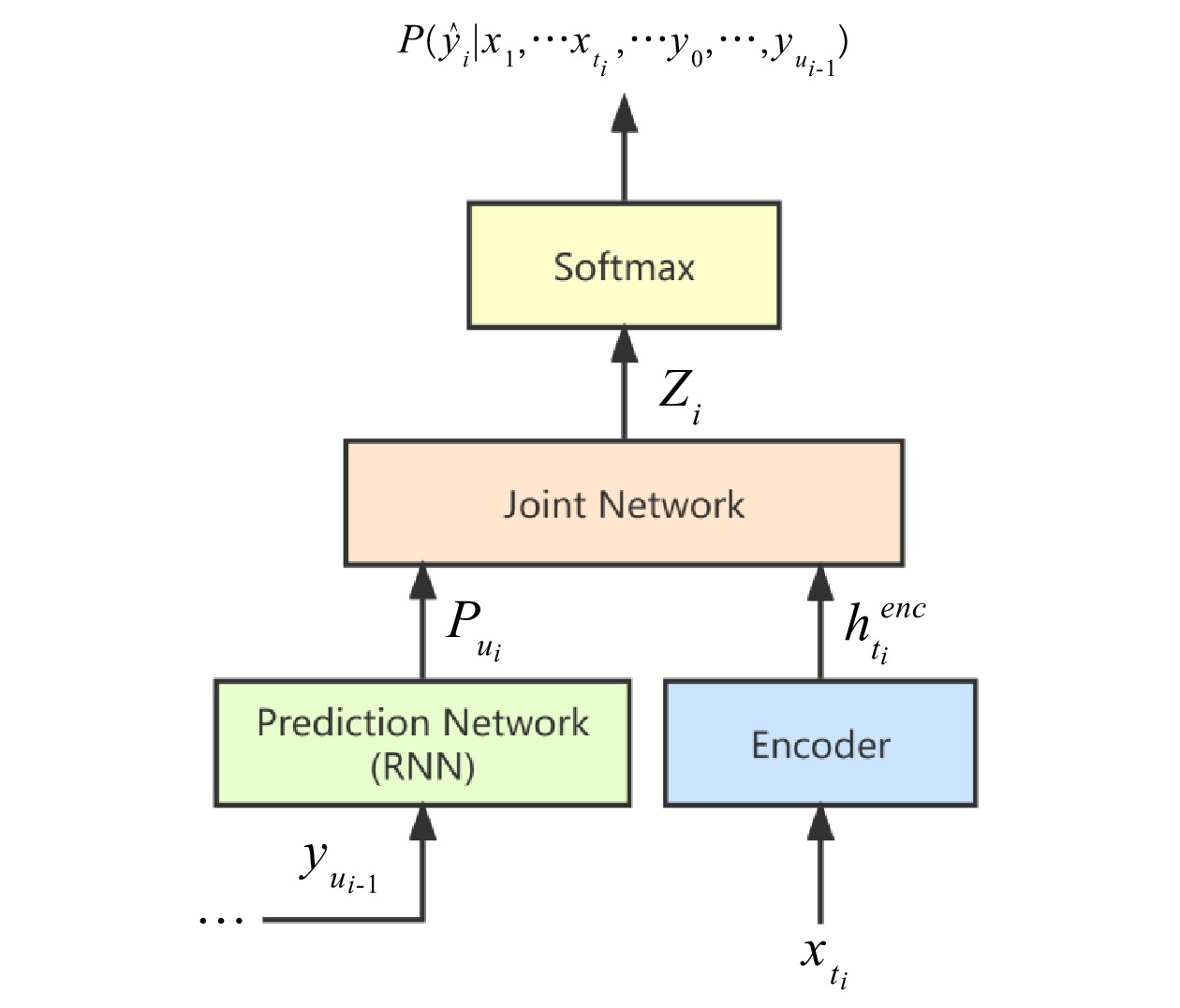

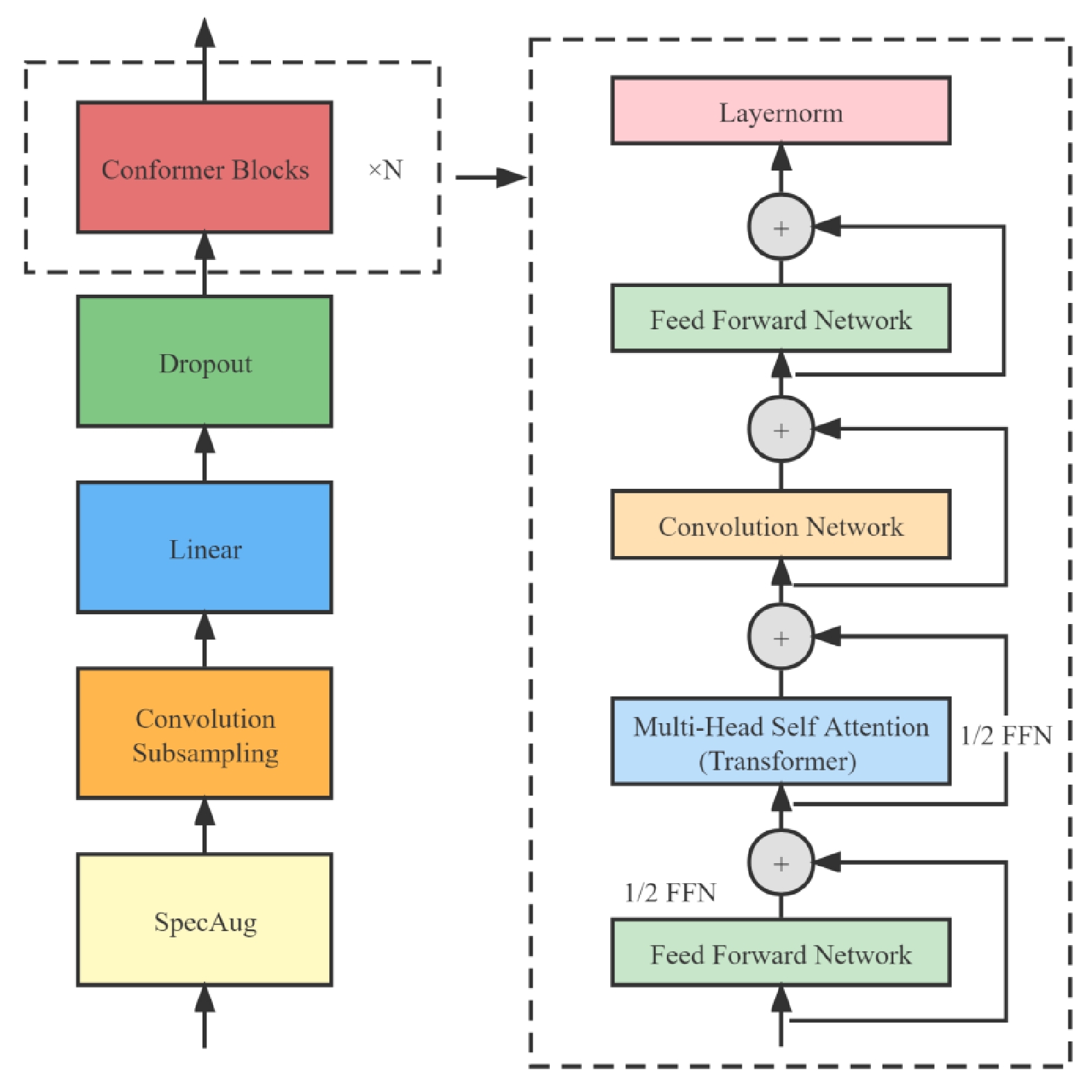

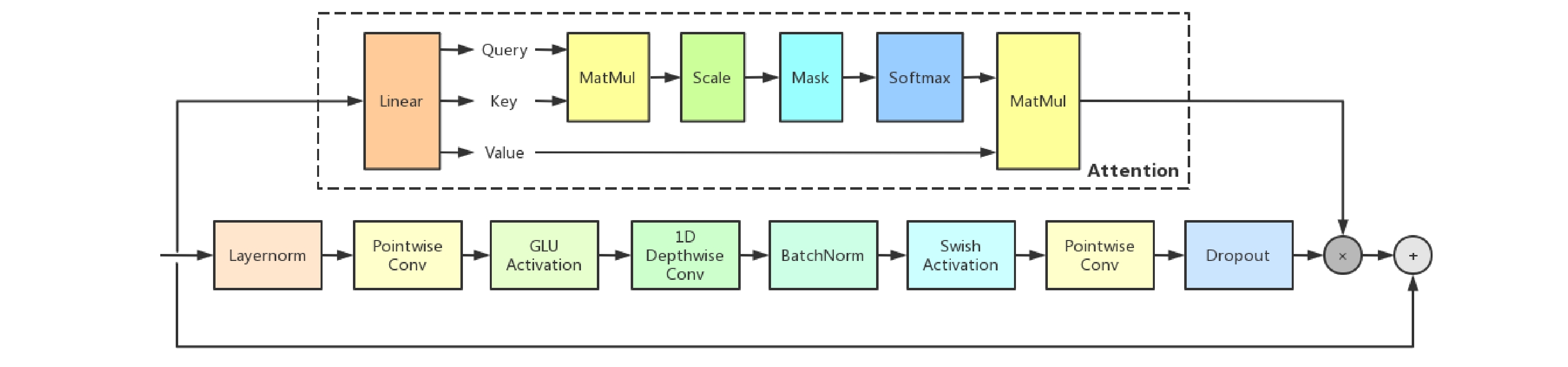

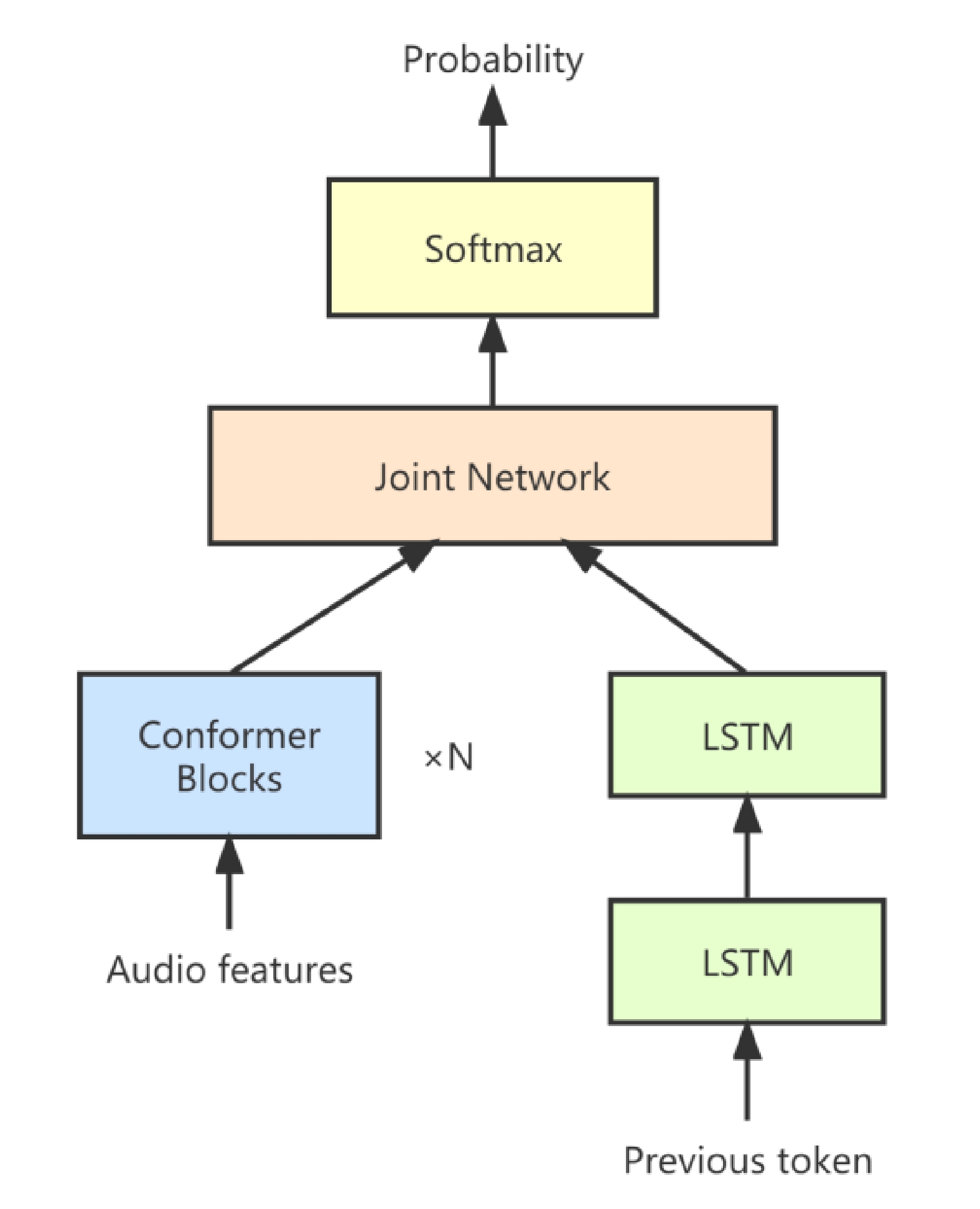

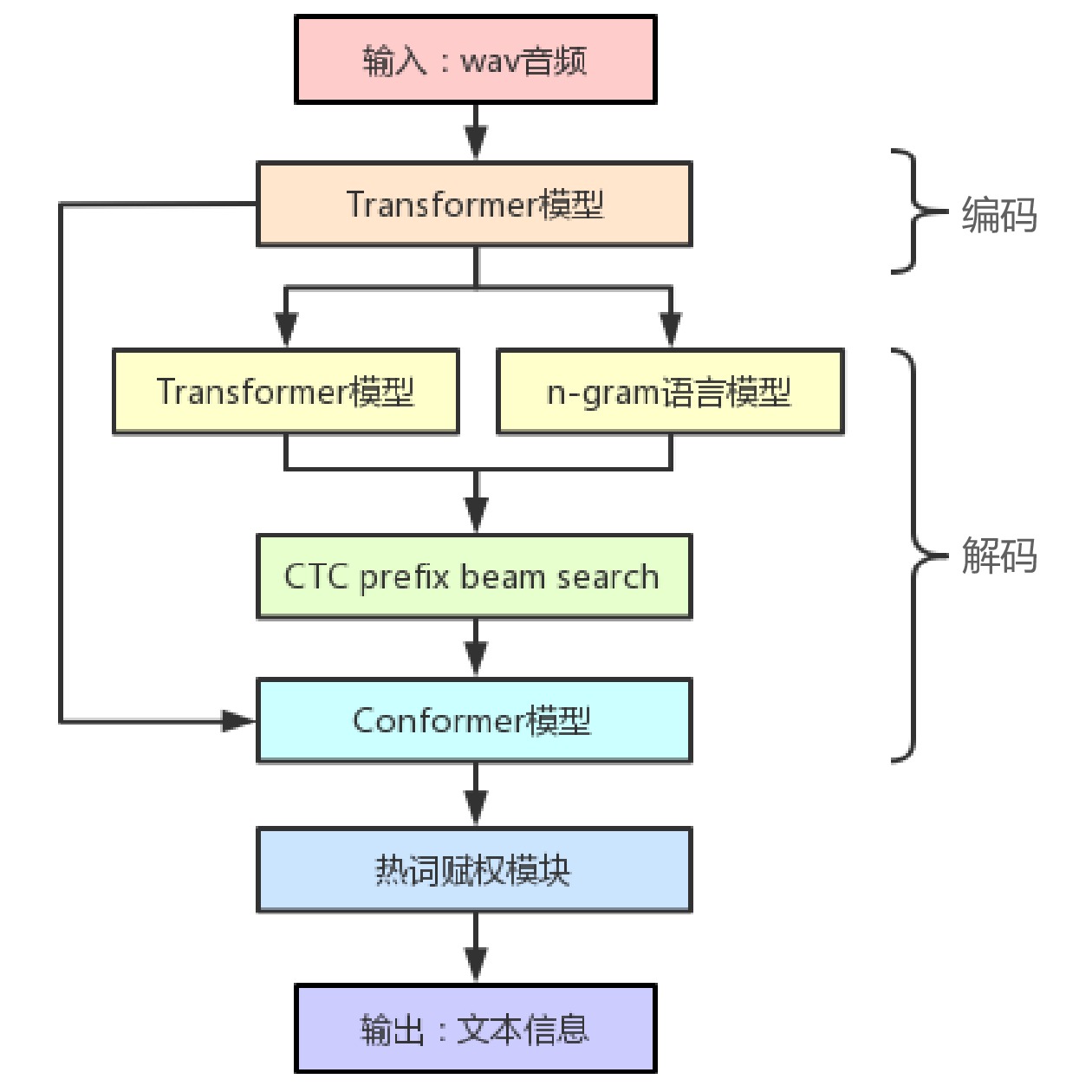

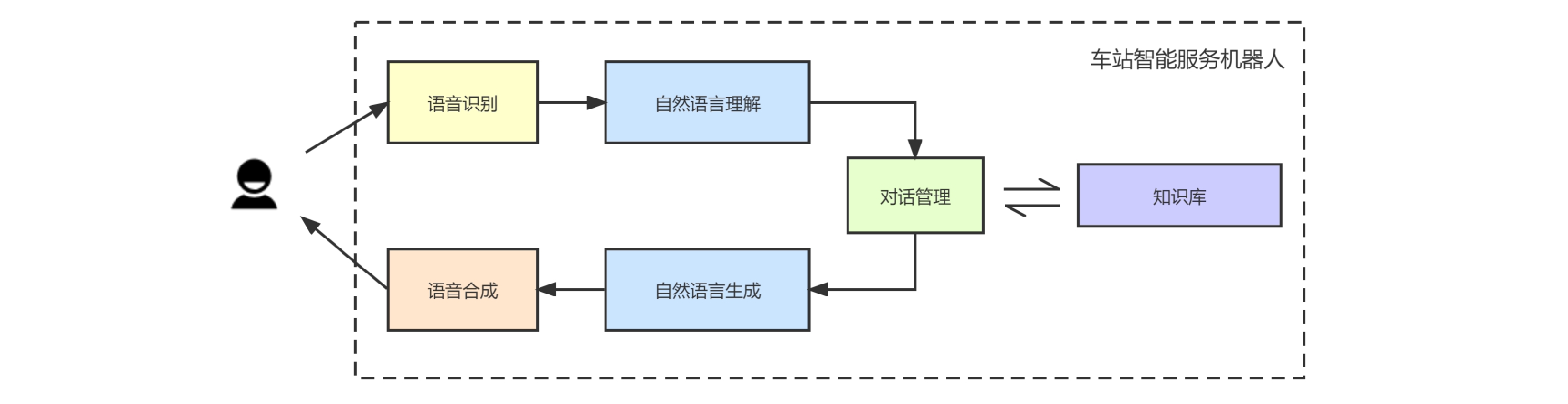

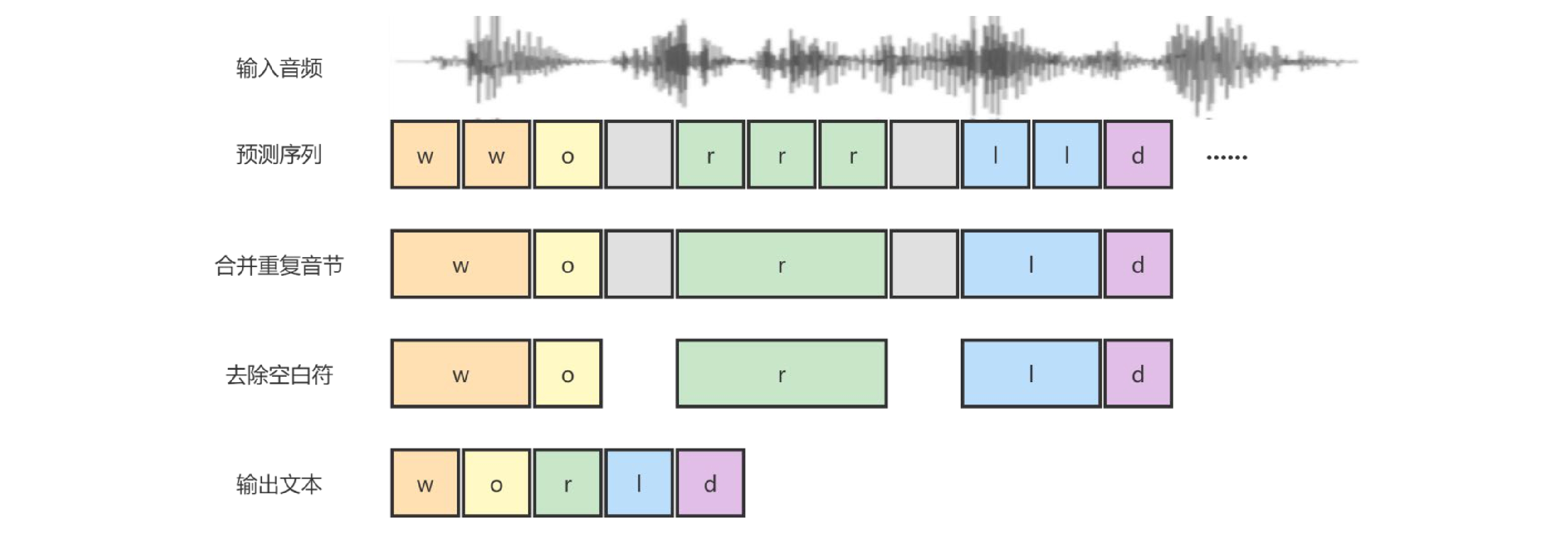

摘要: 为扩大面向铁路旅客服务的语音识别应用,文章研究适用于铁路旅客服务应用的语音识别模型,使用基于卷积增强的Conformer编码结构和RNN-T模型结构,构建基于Conformer-Transducer的语音识别模型。由于卷积网络容易忽视输入信号整体与局部间关联,在Conformer结构中的卷积模块加入注意力机制,用以修正卷积模块的计算结果。构建铁路旅客服务语音数据集,对改进的语音识别模型进行测评;结果表明:改进后的语音识别模型准确率达到92.09%,相较于一般的Conformer-Transducer模型,语音识别字错误率降低0.33%。鉴于铁路旅客服务涉及铁路出行条例、旅客常问问题等众多文本信息,在语音识别模型中融入语言模型与热词赋权2种文本处理机制,使其在铁路专有名词的识别上优于通用的语音识别算法;文章研究提出的语音识别模型已应用于旅客常问问题查询设备和车站智能服务机器人,有助于提高铁路旅客服务水平,改善铁路旅客出行体验,促进铁路旅客服务工作实现减员增效。Abstract: In order to promote the application of speech recognition for railway passenger services, a study on speech recognition model for railway passenger service applications is made, in which the Conformer encoder structure based on convolution enhancement and the RNN-Transducer model structure are used to realize the Conformer-Transducer speech recognition model. Since the convolution neural networks tend to ignore the association between the whole signal and a signal sequence, the convolution module in the Conformer structure are improved and the attention mechanism is added to the convolution module for modifying the calculation results of the convolution module. A speech data set of railway passenger service is built to test and evaluate the improved model and the results show that the accuracy of the improved speech recognition model can reach 92.09% and the error rate of speech recognition is reduced by 0.33% compared with the general Conformer-Transducer model. Because railway passenger services involves specific text information, such as railway travel regulations and frequently asked questions by the passengers, a text processing mechanism, language model or weighting of hot words, is then integrated into the speech recognition model, which enable the model recognize railway-specific terms better than other speech recognition algorithims. This speech recognition model has been applied in passenger FAQ inquiry equipment and intelligent station service robot, which is conducive not only to enhance the level of railway passenger services and improve railway passenger travel experience but also to facilitate downsizing the staff and increasing the work efficiency of railway passenger service.

-

-

表 1 数据集统计信息

数据集划分 语音时长 / h 语音-文本数据对 / ×103对 训练集 527 236.2 测试集 219 91.4 表 2 实验环境配置

实验环境 配置 操作系统 Linux CPU型号 Inter(R) Xeon(R) CPU E5-2698 v4 @ 2.20 GHz GPU型号 Tesla V100 运行内存 251 GB 程序语言 Python 程序框架 Pytorch 表 3 2种语音识别模型的参数规模设置

模型 Params(B) Layers Dimension Attention Heads Conformer small 0.6 34 1024 8 Conformer big 1.0 36 1024 8 表 4 RNN-T基线模型、T-T模型和改进前后的C-T模型的测评结果

模型 CER/% 与基线模型差值/% 基线模型 9.13 − T-T 8.59 −0.54 C-T (Conv) small 8.24 −0.89 C-T (Conv) big 8.15 −0.98 C-T (Conv+Attention) small 7.98 −1.15 C-T (Conv+Attention) big 7.91 −1.22 -

[1] Lawrence R. Rabiner. A Tutorial on Hidden Markov Models and Selected Applications in Speech Recognition [J]. Proceeding of the IEEE, 1989, 77(2): 257-286. DOI: 10.1109/5.18626

[2] Reynolds D. A. and Rose Richard. Robust Text-Independent Speaker Identification Using Gaussian Mixture Speaker Models [J]. IEEE Transactions on Speech and Audio Processing, 1995, 3(1): 72-83. DOI: 10.1109/89.365379

[3] Thomas Epelbaum. Deep learning: Technical introduction [J]. arXiv prepeint arXiv:, 1709, 01412: 2017.

[4] Alex Graves, Santiago Fernandez, Faustino Gomez, et al. Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks[C]//In the proceeding of the 23rd International Conference on Machine Learning. Pittsburgh, Pennsylvania, USA: ACM, 2006: 369-376.

[5] Alex Graves. Sequence Transduction with Recurrent Neural Networks [J]. arXiv prepeint arXiv:, 1211, 3711: 2012.

[6] CHAN W, JAITLY N, LE Q, et al. Listen Attend and Spell: A Neural Network for Large Vocabulary Conversational Speech Recognition[C]//In the proceeding of 2016 IEEE International Conference on Acoustics, Speech and Signal Processing. Shanghai, China: IEEE, 2016: 4960-4964.

[7] Qian Zhang, Han Lu, Hasim Sak, et al. Transformer Transducer: A Streamable Speech Recognition Model Transformer Transducer With Transformer Encoders And RNN-T Loss[C]//In the proceeding of 2020 IEEE International Conference on Acoustics, Speech and Signal Processing. Barcelona, Spain: IEEE, 2020: 7829-7833.

[8] Sundermeyer Martin, Schlüter Ralf, Ney Hermann. LSTM Neural Networks for Language Modeling[C]//In the proceeding of INTERSPEECH 2012, 13th Annual Conference of the International Speech Communication Association. Portland, Oregon, USA, 2012: 194-197.

[9] Ashish Vaswani, Noam Shazeer, Niki Parmar, et al. Attention Is All Your Need[C]//In the proceeding of the 31st International Conference on Nerual Information Processing Systems. Los Angeles, USA: MIT Press, 2017: 6000-6010.

[10] Anmol Gulati, James Qin, Chung-Cheng Chiu, et al. Conformer: Convolution-augmented Transformer for Speech Recognition[C]//In the proceeding of INTERSPEECH 2020, 21th Annual Conference of the International Speech Communication Association. Shanghai, China, 2020: 5036-5040.

-

期刊类型引用(3)

1. 刘文韬,牛青坡,宋阳. 基于可视化技术的铁路运营条件信息管理系统设计. 铁路计算机应用. 2021(01): 62-66 .  本站查看

本站查看

2. 胡德忠,张铁志,周浩. 内燃机车轮缘磨耗预测与健康度评价初探. 哈尔滨铁道科技. 2021(01): 19-26 .  百度学术

百度学术

3. 焦雄风,马龙,金卫峰,陈铮,张献州. 运营高铁重点监测地段云评估系统设计与实现. 铁道勘察. 2021(04): 43-47 .  百度学术

百度学术

其他类型引用(1)

下载:

下载: